Table of Contents

- OCR, Explained Like You’re Busy

- Why OCR Matters More Than You Think

- How OCR Works: From Pixels to Words

- Types of OCR: Printed Text, Handwriting, and “Why Is This Form Designed Like That?”

- OCR vs. Similar Technologies (So You Don’t Accidentally Buy the Wrong Buzzword)

- What Affects OCR Accuracy?

- Common OCR Use Cases (With Concrete Examples)

- OCR in Real Life: Practical Tips That Save Your Sanity

- Security, Privacy, and “Should We Upload This?”

- OCR FAQ

- Real-World Experiences With OCR (The Good, the Bad, and the “Why Is This Receipt Gray?”)

- Conclusion

Optical Character Recognition (OCR) is the behind-the-scenes magic that turns “text trapped in a picture” into

text you can search, copy, highlight, edit, translate, and feed into software. If you’ve ever hit

Ctrl + F in a scanned PDF and actually found what you needed, you’ve benefited from OCR.

If you’ve ever hit Ctrl + F in a scanned PDF and nothing happened… you’ve also met OCR (on a bad day).

In plain terms: OCR is image-to-text. It looks at letters and numbers inside an image (like a scan,

photo, or screenshot) and converts them into digital characters your computer can understand.

OCR, Explained Like You’re Busy

A scanner (or your phone camera) captures a document as pixels. To your computer, that scan is basically a big

photograph, even if it’s a contract, a recipe card, or your dentist’s “friendly” invoice.

OCR software analyzes those pixels, identifies shapes that look like letters, and outputs a text layer.

That’s why OCR is often used to create a searchable PDF: the original image stays visible,

but OCR adds an invisible (or selectable) text layer on top so that you can search, select, and copy text, and assistive

technologies can read it.

Why OCR Matters More Than You Think

OCR is a key step in document digitization. It helps turn paper-heavy processes into searchable,

automatable workflows, without forcing humans to retype everything (and without introducing creative spelling).

Everyday benefits

- Searchability: Find names, totals, dates, and keywords inside scanned PDFs and images.

- Editability: Convert printed text into editable text for documents and forms.

- Accessibility: Screen readers work far better when text is real text, not just an image.

- Automation: Extract text for indexing, compliance checks, routing, analytics, and archiving.

Business benefits

- Faster processing: Invoices, receipts, forms, and claims can be handled at scale.

- Fewer manual errors: People get tired; OCR doesn’t (but it does get confused by smudges).

- Structured data extraction: Modern “document AI” tools can identify tables, fields, and layout.

How OCR Works: From Pixels to Words

OCR isn’t one single step; it’s a pipeline. Older OCR systems leaned heavily on hand-tuned rules; modern OCR often

uses machine learning and deep learning models trained on huge datasets. Either way, the flow tends to look like this:

1) Image capture

The input could be a scan (TIFF/PDF), a phone photo (JPG/PNG), a screenshot, or even a frame from a video.

Clean inputs matter: higher resolution, better lighting, and less blur typically improve recognition quality.

2) Pre-processing

Before “reading” anything, OCR often cleans the image: de-skewing a crooked page, reducing noise, increasing contrast,

removing shadows, and sometimes converting to black-and-white (binarization). These steps can dramatically affect accuracy.
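
To make binarization concrete, here is a minimal sketch of one well-known approach, Otsu’s method, which picks the gray level that best separates dark ink from light paper. It assumes the page is already a grid of 8-bit grayscale values (0 to 255); the function names and the tiny sample image are illustrative, not taken from any particular OCR engine.

```python
def otsu_threshold(pixels):
    """Return the gray level that maximizes between-class variance (Otsu's method)."""
    hist = [0] * 256
    for row in pixels:
        for value in row:
            hist[value] += 1

    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    weight_dark, sum_dark = 0, 0
    for t in range(256):
        weight_dark += hist[t]
        if weight_dark == 0:
            continue  # no pixels at or below this level yet
        weight_light = total - weight_dark
        if weight_light == 0:
            break  # every pixel is already in the dark class
        sum_dark += t * hist[t]
        mean_dark = sum_dark / weight_dark
        mean_light = (sum_all - sum_dark) / weight_light
        variance = weight_dark * weight_light * (mean_dark - mean_light) ** 2
        if variance > best_var:
            best_var, best_t = variance, t
    return best_t


def binarize(pixels):
    """Map pixels above the threshold to white (255) and the rest to black (0)."""
    t = otsu_threshold(pixels)
    return [[255 if value > t else 0 for value in row] for row in pixels]


# Hypothetical 2x3 grayscale patch: dark ink (~10) on light paper (~200).
sample = [[10, 12, 200],
          [11, 210, 205]]
print(binarize(sample))
```

A single global threshold like this tends to struggle with shadows and uneven lighting, which is one reason real pipelines often combine it with other cleanup steps.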

3) Layout analysis (a.k.a. “where is the text?”)

The software identifies text regions, separates columns, detects lines, and tries to understand structure.

Newer OCR services can detect layout elements like paragraphs, headings, and tables, which is crucial for documents

like invoices and reports.
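
One small piece of layout analysis is putting recognized words back into reading order. The sketch below assumes the OCR step has already produced word boxes with a left edge, top edge, and height (the `Word` class and its fields are hypothetical), and that the page is a single column. Multi-column pages need a real layout model, not this.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    x: float  # left edge
    y: float  # top edge
    h: float  # box height


def reading_order(words, line_tolerance=0.5):
    """Group words into lines by vertical center, then sort each line left to right."""
    lines = []
    for word in sorted(words, key=lambda w: w.y + w.h / 2):
        center = word.y + word.h / 2
        # Same line if the centers are within a fraction of the word height.
        if lines and abs(center - lines[-1]["center"]) <= line_tolerance * word.h:
            lines[-1]["words"].append(word)
        else:
            lines.append({"center": center, "words": [word]})
    return [
        " ".join(w.text for w in sorted(line["words"], key=lambda w: w.x))
        for line in lines
    ]


# Hypothetical boxes, deliberately out of order.
boxes = [
    Word("Total:", 10, 100, 12),
    Word("Invoice", 10, 20, 12),
    Word("$85.40", 120, 101, 12),
    Word("#1042", 90, 21, 12),
]
print(reading_order(boxes))
```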

4) Character/line recognition

This is the main event: the engine predicts characters or entire text lines from image patterns.

Many modern systems recognize text at the line level (not one character at a time),

which can be more accurate, especially for varied fonts and imperfect scans.

5) Post-processing

OCR output is often “cleaned up” using dictionaries, language models, pattern rules, and context.

Example: If the model sees “TotaI” (with a capital “I”) in an invoice, post-processing might correct it to “Total”

based on common words and document context.
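
The “TotaI” fix can be sketched with Python’s standard `difflib` module. This is only an illustration of dictionary-based cleanup: the vocabulary list and the 0.75 similarity cutoff are assumptions, and production systems typically rely on richer language models and document context.

```python
import difflib


def correct_token(token, vocabulary, cutoff=0.75):
    """Replace a token with its closest vocabulary entry, if one is similar enough."""
    if token in vocabulary:
        return token
    matches = difflib.get_close_matches(token, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else token


# Hypothetical vocabulary of words expected on an invoice.
invoice_words = ["Total", "Date", "Invoice"]
print(correct_token("TotaI", invoice_words))
```

Tokens with no close match are returned unchanged, so names and part numbers are not silently “corrected” into dictionary words.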

Types of OCR: Printed Text, Handwriting, and “Why Is This Form Designed Like That?”

Printed-text OCR

This is the classic OCR use case: books, letters, typed contracts, receipts, and reports. Clean printed text with

good contrast is the easiest scenario.

Handwriting recognition (ICR/HTR)

Handwriting is harder because the “font” changes with every person and sometimes every mood.

Some tools support handwritten text extraction, but expectations should be realistic: neat block letters typically

outperform cursive, quick scribbles, and “doctor handwriting.”

Forms and tables

A big leap in recent years is OCR that understands structure: forms, key-value pairs, and tables.

This goes beyond basic text recognition into document analysis: not just “what does it say?” but “what does it mean

and where does it belong?”

OCR vs. Similar Technologies (So You Don’t Accidentally Buy the Wrong Buzzword)

OCR vs. OMR

OCR reads letters and numbers. OMR (Optical Mark Recognition) detects marks, like filled bubbles on standardized tests.

If you’re trying to read a checkbox, OCR may help, but OMR is the classic “bubble sheet” specialist.

OCR vs. barcode/QR scanning

Barcodes and QR codes are designed to be machine-readable. OCR is used when humans wrote/printed text for humans

and you’d now like computers to join the party.

OCR vs. “document understanding”

OCR extracts text. Document understanding tries to identify fields, entities, and relationships (like invoice totals,

vendor names, due dates). Many modern platforms bundle OCR with higher-level extraction.
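
To show the difference, here is a deliberately simple sketch that turns raw OCR text into named fields using regular expressions. The field names, label wording, and date format are assumptions made for this example; real document-understanding platforms use layout and trained models, not a handful of patterns.

```python
import re

# Hypothetical patterns for one invoice layout; labels and formats are assumptions.
FIELD_PATTERNS = {
    "invoice_number": re.compile(r"Invoice\s*(?:No\.?|#)\s*:?\s*([A-Z0-9-]+)", re.IGNORECASE),
    "due_date": re.compile(r"Due\s*Date\s*:?\s*(\d{4}-\d{2}-\d{2})", re.IGNORECASE),
    # \b keeps "Subtotal" from matching as "Total".
    "total": re.compile(r"\bTotal\s*:?\s*\$?\s*(\d+(?:,\d{3})*\.\d{2})", re.IGNORECASE),
}


def extract_fields(ocr_text):
    """Return a dict of field name -> captured value (None when not found)."""
    fields = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = pattern.search(ocr_text)
        fields[name] = match.group(1) if match else None
    return fields


ocr_text = (
    "ACME Supplies\n"
    "Invoice No: INV-1042\n"
    "Due Date: 2024-05-31\n"
    "Subtotal: $80.00\n"
    "Total: $85.40\n"
)
print(extract_fields(ocr_text))
```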

What Affects OCR Accuracy?

OCR accuracy is not a personality trait; it’s a result of inputs, settings, and context. Here are the most common

factors that decide whether OCR feels like wizardry or like a prank:

Image quality

- Resolution: Low DPI scans can blur character edges, making “8” look like “B.”

- Blur and motion: Phone photos taken while walking are basically abstract art to OCR.

- Lighting and shadows: A shadow across text can turn letters into mystery blobs.

- Noise and compression: Heavy JPG artifacts can create fake “edges” that confuse recognition.

Typography and layout

- Font choice: Decorative fonts are fun for invitations and terrible for machines.

- Small text: Tiny print on receipts and medicine labels is a common failure point.

- Columns, tables, and multi-page structure: Complex layouts need strong layout analysis.

Language and context

- Mixed languages: Documents with multiple languages require robust language support.

- Domain terms: Names, part numbers, and medical terms may need custom vocabularies.

- Expected patterns: Dates, currency, and IDs can be validated with pattern rules.

How accuracy is measured

OCR quality is often discussed using error rates (for example, character-level error rates) and field-level success

in forms. In practical workflows, teams also track business metrics like “how many documents needed manual review”

and “how often did totals extract correctly.”
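
A character-level error rate is commonly computed as the edit distance between the OCR output and a trusted reference transcription, divided by the reference length. The sketch below is one straightforward way to do that; the function names are illustrative.

```python
def edit_distance(reference, hypothesis):
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        current = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            cost = 0 if ref_char == hyp_char else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution or match
            ))
        previous = current
    return previous[-1]


def character_error_rate(reference, hypothesis):
    """Edit distance divided by the number of characters in the reference."""
    if not reference:
        raise ValueError("reference text must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


print(character_error_rate("Total", "TotaI"))  # one wrong character out of five
```

Because insertions count as errors, this rate can exceed 1.0 when the OCR output is much longer than the reference.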

Common OCR Use Cases (With Concrete Examples)

1) Making scanned PDFs searchable

You scan a 40-page lease agreement. Without OCR, it’s an image-only PDF. With OCR, you can search “termination,”

copy clauses into an email, and highlight important sections.

2) Receipts and expense reports

OCR pulls merchant names, dates, and totals so finance software can auto-fill expense entries. The tricky part is

that receipts are often crumpled, faded, and printed in microscopic font: basically OCR’s obstacle course.

3) Invoices, forms, and back-office automation

OCR combined with layout understanding can extract structured data (like line items or key-value pairs) from invoices,

purchase orders, and application forms. This reduces manual data entry and speeds up approvals.

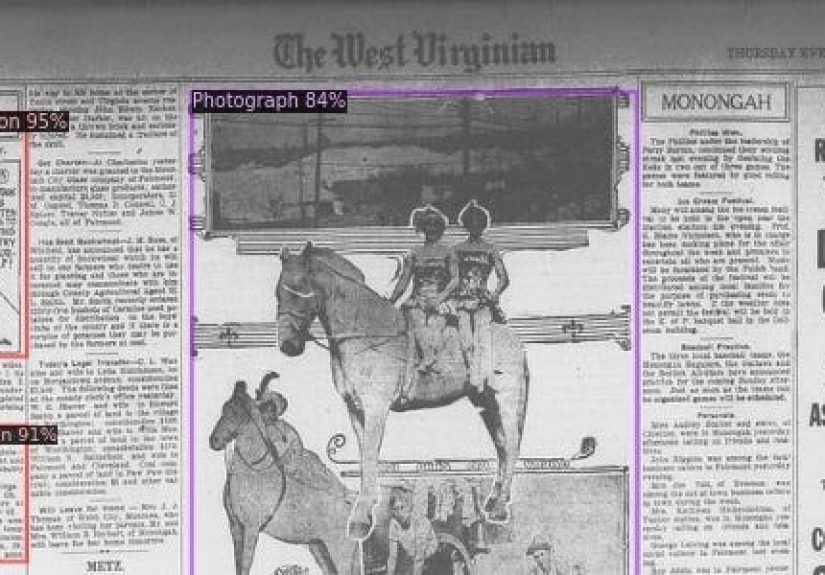

4) Archives, libraries, and historical documents

Large digitization projects use OCR so people can keyword-search newspapers, books, and records. Historical documents

add unique challenges: old fonts, damaged pages, uneven ink, and sometimes creative 19th-century spelling.

5) Accessibility and compliance

OCR helps convert image-based documents into formats that assistive technologies can read. It can be an important step

toward making PDFs and digital content more accessible.

OCR in Real Life: Practical Tips That Save Your Sanity

Get the best scan you can (before you “fix it in software”)

- Use good lighting and avoid shadows if photographing documents.

- Keep the page flat; wrinkles create warped letters.

- Capture at higher resolution when possible (especially for small print).

- Crop to the page; busy backgrounds create false positives.

Choose the right tool for the job

If your goal is “make my PDF searchable,” a PDF tool with OCR is usually enough. If your goal is “extract totals,

table cells, and form fields,” you want OCR plus document analysis.

Expect a human-in-the-loop for high-stakes data

For compliance, medical records, legal documents, and financial totals, the safest workflows treat OCR output as

“draft data” that can be reviewed. The better your validation rules and exception handling, the less review you need.

Security, Privacy, and “Should We Upload This?”

OCR often involves sensitive documents: IDs, invoices, contracts, medical forms, and HR paperwork.

If you’re using cloud OCR, your policy decisions matter:

- Data handling: Know whether documents are stored, logged, or used for service improvement.

- Access control: Restrict who can view raw documents and extracted text.

- Redaction: Consider redacting sensitive fields before storage or sharing.

- On-prem options: Some platforms support containerized or local deployments for tighter control.

Bottom line: OCR turns images into searchable text, which can make sensitive content easier to find, both for you and,

if you’re careless, for the wrong people. Treat extracted text with the same care as the original document.

OCR FAQ

Does OCR work on handwriting?

Sometimes. Many tools can extract some handwritten text, especially neat printing. Messy cursive and rushed notes

can still be difficult. If handwriting is a major requirement, test with your real samples before committing.

Can OCR read tables?

Basic OCR may capture the text inside a table but lose structure. More advanced document analysis can preserve table

relationships (rows/columns/cells) and output structured results.

Why does OCR confuse “O” and “0” (and “l” and “1”)?

Those characters are visually similar, especially in certain fonts or low-resolution scans. Context helps, but not

always. For IDs, serial numbers, and totals, validation rules can catch common mistakes.
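
For a field that is known to contain only digits, one possible validation rule is to map look-alike letters back to digits and reject anything that still isn’t numeric. The mapping below is an illustrative assumption, and it should only ever be applied to fields you already know are numeric, never to free text.

```python
import re

# Hypothetical look-alike map for digits-only fields.
CONFUSABLE_TO_DIGIT = str.maketrans({
    "O": "0", "o": "0",
    "l": "1", "I": "1",
    "S": "5", "B": "8",
})


def normalize_numeric_field(raw):
    """Return a digits-only string, or None if the value still fails validation."""
    candidate = raw.strip().translate(CONFUSABLE_TO_DIGIT)
    return candidate if re.fullmatch(r"[0-9]+", candidate) else None


print(normalize_numeric_field("1O0l7"))
print(normalize_numeric_field("AB12"))
```

Returning `None` instead of a best guess makes it easy to send the failures to a person for review.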

Is OCR the same as “text extraction” from PDFs?

Not exactly. If a PDF already contains real text (like a digitally generated PDF), you can extract it without OCR.

OCR is needed when the PDF is image-only (like a scan).

Real-World Experiences With OCR (The Good, the Bad, and the “Why Is This Receipt Gray?”)

OCR sounds simple until it meets real documents, which rarely behave like clean demo samples. In practice, teams

often discover that OCR success is less about the engine’s “IQ” and more about the workflow around it.

For example, a common first win is converting a pile of scanned PDFs into searchable documents. That feels like

instant productivity: keyword search starts working, copy/paste becomes possible, and people stop retyping names

from screenshots like it’s 1999. But the honeymoon phase can end when someone scans a 200-page binder at low

resolution, slightly tilted, with a coffee stain on page 37. Suddenly “searchable” becomes “searchable-ish,” and

“copy text” becomes “copy modern art.”

Another frequent experience: receipts. Receipts look harmless, until you realize they’re often printed with tiny,

heat-sensitive text that fades over time. Add a wrinkled paper surface and low contrast, and OCR may confidently

declare that your lunch total was “$8S.40.” (Was it? Did you accidentally cater for 30 people?) Teams that process

receipts at scale usually learn to set guardrails: validate totals against expected currency patterns, flag

suspicious outputs, and route unclear cases for review. The lesson is consistent: OCR is powerful, but it likes

rules, structure, and a safety net.
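
Those guardrails can be as small as a pattern check plus a sanity limit. The sketch below assumes US-style dollar amounts and an arbitrary 500.00 ceiling for a single receipt; both are placeholders you would tune to your own data.

```python
import re
from decimal import Decimal

# Assumed format: "$" followed by digits (optional thousands commas) and two decimals.
AMOUNT_RE = re.compile(r"\$(\d{1,3}(?:,\d{3})*|\d+)\.\d{2}")


def check_total(raw, max_expected=Decimal("500.00")):
    """Return ("accept", amount) or ("review", reason) for an extracted total."""
    text = raw.strip()
    if not AMOUNT_RE.fullmatch(text):
        return ("review", "does not look like a currency amount")
    amount = Decimal(text.lstrip("$").replace(",", ""))
    if amount > max_expected:
        return ("review", "amount above expected range")
    return ("accept", str(amount))


for value in ["$8S.40", "$8.40", "$1,250.00"]:
    print(value, check_total(value))
```

Here the misread “$8S.40” fails the pattern check and goes to review, while a well-formed but unusually large total is flagged by the range check.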

Forms and tables introduce a different flavor of chaos. Even if OCR reads every word correctly, it might place the

words in the wrong order or detach them from their fields. Real workflows often evolve from “extract everything”

to “extract what matters.” Instead of capturing every character, they focus on key fields (invoice number, date,

total, vendor) and use layout-aware extraction. The result is usually faster, more accurate, and easier to audit.

There’s also the “history problem.” When digitizing older books, newspapers, and archival documents, the challenge

isn’t just faded ink; it’s typography, page damage, and unusual spelling. Many projects discover that OCR output can

be improved with post-processing tuned to the collection: custom dictionaries, cleanup rules, and sometimes even

re-running OCR with updated models. The experience here is almost philosophical: OCR isn’t a one-time event; it can

be an ongoing improvement loop.

The most useful mindset shift is to treat OCR as a component, not a miracle. The best outcomes come when OCR is

paired with thoughtful capture (good scans), smart validation (pattern checks), and clear exception handling

(human review for edge cases). Do that, and OCR becomes a reliable workhorse, turning “paper stuck in pixels” into

searchable, usable data that actually moves work forward.