- The short answer: reports don’t delete accounts, violations do

- What happens when you report a post or profile?

- So… how many reports does it take to delete an account?

- Why some accounts vanish quickly (and others don’t)

- Report types that most commonly lead to account removal

- How long does it take Instagram to act on reports?

- If your account is getting reported: how to protect yourself

- If you need to report someone: do it responsibly (and effectively)

- When to escalate beyond Instagram

- FAQ: Quick answers people actually want

- Conclusion: the “report count” myth vs the real system

- Real-World Experiences: What People Notice When Reporting (and Being Reported)

- Experience #1: The impersonator who disappeared fast

- Experience #2: The scammer who wouldn’t quit (until the pattern became obvious)

- Experience #3: The bullying account that got limited, not deleted

- Experience #4: The small business hit by false reports

- Experience #5: The “nothing happened” report that still mattered

Let’s bust the biggest Instagram myth in one sentence: there’s no secret “magic number” of reports that automatically deletes an Instagram account. If there were, the internet would treat it like a cheat code, and Instagram would have to rename itself “Deletegram.”

So what actually happens when people report an account? Why do some accounts disappear fast while others seem to survive a thousand angry taps? And how can you protect your own account if you’re getting reported (fairly or unfairly)? This guide breaks it down in plain English, with real-world examples and a few reality checks sprinkled in for flavor.

The short answer: reports don’t delete accounts, violations do

Instagram’s enforcement is based on policy violations, not popularity contests. Reports are basically “Hey, Instagram, please review this.” If the account (or content) breaks the rules, action may follow. If it doesn’t, reporting it repeatedly won’t magically turn it into a violation.

That’s why you’ll hear two totally different stories:

- “My friend’s impersonator got removed in a day.”

- “I reported a scammer five times and they’re still posting ‘DM me to get rich.’”

Both can be true, because the outcome depends on what’s being reported, how clear the evidence is, and how Instagram’s systems classify it.

What happens when you report a post or profile?

When you report something on Instagram, you’re sending it into a moderation pipeline. The review may involve automated detection, human review, or a combination. Possible outcomes include:

- No action (Instagram doesn’t find a violation).

- Reduced visibility (content may be ranked lower in feeds/explore if it’s borderline or spammy).

- Content removal (a post/story/comment gets taken down).

- Account restrictions (features limited, reach reduced, or temporary limits applied).

- Account disabled (for severe violations or repeated violations over time).

Also, reports are generally anonymous to the account being reported, except in certain cases like intellectual property reports, where additional information may be required.

So… how many reports does it take to delete an account?

Here’s the truth most people don’t want to hear: Instagram doesn’t publish a required number of reports, and there isn’t a public “X reports = deletion” rule. Platforms avoid sharing exact thresholds because it makes the system easier to game.

What reports can do is increase the odds that something gets reviewed faster, especially when:

- Multiple people report the same clear violation (not just “I don’t like this person”).

- The report category matches what’s actually happening (spam reported as spam, impersonation reported as impersonation, etc.).

- The reported behavior looks like a pattern (e.g., mass scam messages, repeated harassment, repeated policy-breaking posts).

Translation: It’s not about how many reports you stack like pancakes. It’s about whether the account is serving policy violations as the main course.

Why some accounts vanish quickly (and others don’t)

1) Severity matters more than volume

Some violations are treated as high-risk or high-severity. In those cases, Instagram may take strong action quickly if the violation is clear. Examples include certain forms of impersonation, serious scams, and other safety-related violations. Meanwhile, borderline content might take longer, or result in smaller actions like reduced reach or a warning.

2) The account’s history is a big deal

Instagram considers whether an account has prior enforcement actions or “strikes.” Think of it like a driver’s record: one questionable lane change might get a warning, but repeated reckless driving eventually gets your keys taken away.

Meta’s enforcement approach across its platforms includes escalating consequences when violations continue, especially after warnings or restrictions.

3) Evidence beats outrage

Reports that clearly match a policy category tend to be easier to review. If you report “spam,” and the account is blasting identical messages, that’s a clean match. If you report “hate” because someone posted a hot take you dislike, the review likely ends in “no violation.”

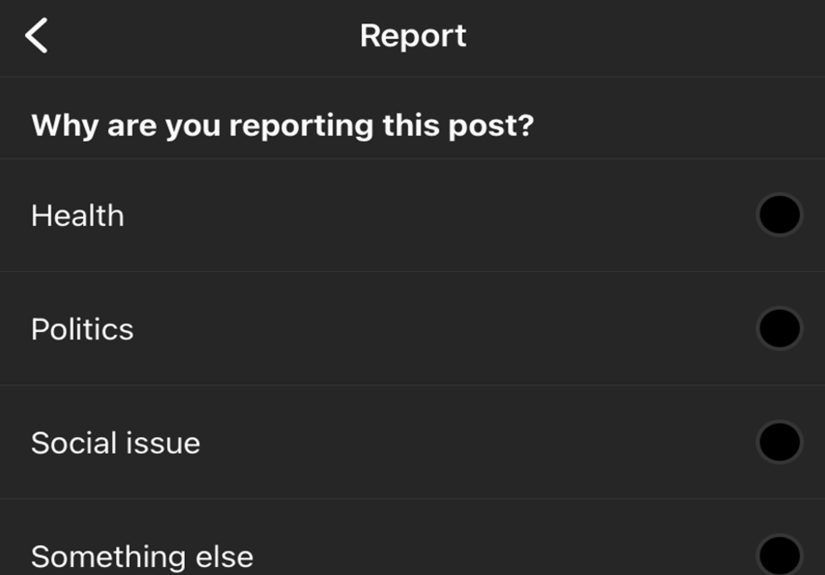

4) Different report types go through different lanes

Not all reports are equal. Reporting a random profile for “It’s spam” is different from filing an impersonation report or an intellectual property complaint. Some issues use specialized forms and may require documentation.

Report types that most commonly lead to account removal

Account removal typically happens when there’s either a severe violation or repeated violations. These categories often matter:

Impersonation (especially “pretending to be me”)

Impersonation is one of the most straightforward categories when you can prove identity. Instagram may ask for verification (like an ID) in some cases, which can speed up decisions because it provides hard evidence.

Scams, fraud, and spam behavior

Accounts pushing fake giveaways, “investment” DMs, shady links, or mass-spam behavior can trigger restrictions or removal, especially if the behavior is repetitive and clearly deceptive.

Harassment and bullying patterns

A single rude comment might be handled differently than an account dedicated to targeting someone repeatedly. Instagram encourages reporting harassment and bullying, and repeated behavior can lead to escalating penalties.

Privacy violations (like exposed private information)

If someone posts private info (think phone numbers, addresses, or other personal details), that’s often treated seriously because it can create real-world harm.

Intellectual property (copyright/trademark) takedowns

IP reports are a separate category and usually require the rights owner (or an authorized representative) to submit the claim. This is one reason “just report it a bunch” doesn’t work: some actions require a specific kind of report from a specific kind of person.

How long does it take Instagram to act on reports?

There’s no universal clock. Some actions can happen quickly, while others take longer depending on volume, complexity, and whether additional verification is needed. A few practical notes:

- You can sometimes view report status in your Support Requests area, but not every report will show up.

- Some decisions (especially content removals) can show up in Account Status for the person whose content was impacted.

- Appeals and reviews may be available for removed content or disabled accounts.

If your account is getting reported: how to protect yourself

If you’re worried about being reported (whether due to misunderstandings, jealous competitors, or the internet being… the internet), focus on what actually helps.

1) Check Account Status and fix anything questionable

Instagram provides tools to show if content was removed and whether your account is at risk of additional penalties. If something was removed and you believe it was a mistake, you may be able to request review.

2) Avoid third-party “growth” tools

Many accounts get restricted or disabled because of suspicious activity tied to automation tools, aggressive follow/unfollow behavior, or services that violate platform rules. If your account suddenly acts like a bot, Instagram may treat it like one.

3) Secure your account (because hacks look like “violations” sometimes)

A compromised account can start posting spam or sending scam DMs; then people report it, and the account gets punished. Protect yourself by:

- Using a strong, unique password

- Turning on two-factor authentication (2FA)

- Watching for suspicious logins and emails

- Removing unknown connected apps/services

4) Keep receipts (screenshots, dates, context)

If you’re targeted by false reports, documentation helps, especially if you need to appeal a decision or prove impersonation/harassment patterns.

If you need to report someone: do it responsibly (and effectively)

Reporting is meant to improve safety, not to “win” an argument. If you’re reporting harmful content, here’s what genuinely increases the chance of the right outcome:

- Pick the correct category (spam vs harassment vs impersonation vs privacy).

- Report the clearest examples (the posts/messages that show the violation, not just vibes).

- Use official forms for impersonation, privacy, and IP when needed.

- Avoid coordinated mass-reporting campaigns; they’re often viewed as abuse of the system and don’t guarantee action anyway.

Important: False reporting can backfire. If the system detects coordinated abuse (or if you repeatedly file misleading reports), you risk restrictions on your own account.

When to escalate beyond Instagram

If you’re dealing with a scam, fraud attempt, or serious online threat, reporting inside Instagram is a good start, but sometimes you should also report to official channels. In the U.S., people commonly use:

- FTC ReportFraud for scams and fraud patterns

- FBI IC3 for internet crime complaints, especially if money or identity theft is involved

If you’re a teen and something feels scary or unsafe, involve a trusted adult (parent/guardian/teacher) and save evidence. That’s not “overreacting”; that’s being smart.

FAQ: Quick answers people actually want

Does Instagram delete accounts after 10 reports?

No. There’s no public rule like that. An account may be removed after one report if the violation is severe and obvious, or it may survive many reports if there’s no violation.

Can one report get an account deleted?

It can lead to review, and in rare cases a severe violation may result in rapid action. But the report itself isn’t the “delete button.” The violation is what matters.

Why does Instagram say “no violation” when it looks obvious to me?

Sometimes it’s context, sometimes it’s limited evidence, and sometimes the platform simply misses things. Reporting the clearest examples (and using the right reporting lane) helps.

Can you see who reported you?

Typically no. Reports are generally anonymous to the person being reported, with limited exceptions like certain intellectual property processes.

If my account gets disabled, can I appeal?

Often yes. Instagram provides review/appeal options in many cases, including account status tools and review requests for removed content.

Conclusion: the “report count” myth vs the real system

If you remember only one thing, make it this: Instagram doesn’t run on a report counter; it runs on enforcement decisions. Reports are signals. Policies are the rules. Violations are the reason accounts get restricted or removed.

So instead of asking, “How many reports does it take?” ask:

- Is there a clear violation of Instagram’s rules?

- Is this the right report type (spam, impersonation, harassment, privacy, IP)?

- Do I have clear evidence, not just frustration?

- If I’m being targeted, am I checking Account Status and securing my account?

That approach won’t just help you understand the system; it’ll save you time, stress, and a whole lot of angry tapping.

Real-World Experiences: What People Notice When Reporting (and Being Reported)

To make this topic less abstract, here are realistic “what it felt like” experiences people commonly describe when dealing with Instagram reports. These aren’t meant as a how-to for getting someone removed; think of them as a field guide for what tends to happen in the wild.

Experience #1: The impersonator who disappeared fast

A creator notices a new account using their name, profile photo, and even reposting their content. Friends start DMing: “Is this you?” The creator reports it as impersonation and uses the official impersonation lane (not just “spam”), including proof of identity when requested. The result is often faster because the claim is simple to verify: the account is pretending to be someone else, and there’s documentation to back it up. The big takeaway people mention: it wasn’t the number of reports; it was the clarity of the violation and the ability to verify identity.

Experience #2: The scammer who wouldn’t quit (until the pattern became obvious)

Someone’s aunt gets a DM from an account claiming she “won” a giveaway. The account asks for a shipping fee (red flag), then asks for more (bigger red flag wearing a neon hat). Multiple people report it. At first, nothing seems to happen, because scam accounts can pop up, change usernames, or delete messages that are hard for reviewers to see. But once enough signals pile up (reports, blocked users, repeated identical messages, suspicious links), accounts like this often get restricted or removed. The lesson people repeat: single incidents can be hard to verify, but repeated behavior creates a trail that is easier to act on.

Experience #3: The bullying account that got limited, not deleted

A student is targeted by an account posting rude comments and trying to embarrass them. The student reports the account and also blocks it. Sometimes the account doesn’t vanish overnight; instead, the person notices smaller effects first: comments disappear, the account can’t message as easily, or content gets removed. Deletion is more likely when harassment is persistent or escalates, but many people experience a middle step: restrictions before full removal. The practical tip they share is simple: block early, save screenshots, and report specific comments/posts that clearly violate rules, because “this person is mean” is harder to evaluate than “here is the exact harassment.”

Experience #4: The small business hit by false reports

A small shop runs a promo, and suddenly their posts get flagged. Sometimes it’s a competitor, sometimes it’s misunderstanding, and sometimes it’s just unlucky timing with automated systems. The owner panics because reach drops or a post is removed. People in this situation often say the best move was checking Account Status, cleaning up anything that could be misinterpreted (copyrighted music, misleading claims, risky hashtags), and securing the account. When something is removed unfairly, requesting review can help. Their big “wish I knew this sooner” moment: mass reporting doesn’t guarantee deletion, but account health and compliance really do matter.

Experience #5: The “nothing happened” report that still mattered

Someone reports a creepy spam account and gets no satisfying closure. They assume reporting is useless. But what people don’t always see is that reports can still feed detection systems, especially for spam networks. The report might not delete the account immediately, but it can contribute to bigger enforcement later. The best mindset shift: treat reporting like adding a puzzle piece, not pulling a lever that instantly drops a trap door.

Overall pattern across these experiences: when violations are clear, documented, and reported through the correct channel, action is more likely, and sometimes faster. When things are vague, borderline, or hard to verify, outcomes can feel slower or inconsistent. And in all cases, there’s no reliable “number of reports” that works like an on/off switch.