Table of Contents

- The new admissions arms race (and why it’s accelerating)

- Side A: The applicant with a robot co-writer

- Side B: The admissions office with a robot reader

- What an “AI-driven admissions disaster” actually looks like

- How to prevent the crash: practical fixes that don’t require a time machine

- 1) Make the rules explicit and reality-based

- 2) Redesign prompts to reward specificity that AI can’t fake cheaply

- 3) Use more structured, mission-aligned human evaluation

- 4) If AI is used, keep humans accountable and the system auditable

- 5) Shift emphasis toward assessments that are harder to outsource

- What applicants should do (ethically) in an AI-shaped world

- Conclusion: AI can help admissions, but it can’t replace judgment

- Experiences from the front lines (the extra everyone asked for)

Picture this: you pour your heart into your medical school application, hit submit, and the first “person” to judge your life’s work is… a silicon-powered résumé goblin. Not a dean. Not a physician. Not even a sleep-deprived fourth-year with a lukewarm coffee. A machine.

Welcome to the newest admissions arms race: applicants using generative AI to write faster, and schools using AI to read faster. It’s efficient. It’s modern. It’s also one bad policy away from turning “holistic review” into “the algorithm has vibes.”

To be clear: AI itself isn’t the villain twirling a mustache. The real danger is the combination of (1) sky-high application volume, (2) incentives that reward speed and polish over authenticity, and (3) black-box tools that can quietly amplify bias while everyone congratulates themselves for being “data-driven.” If we don’t slow down and set rules that actually make sense, medical school admissions could drift into a system where the best applicants aren’t the most prepared to be physicians; they’re the most prepared to be prompt engineers.

The new admissions arms race (and why it’s accelerating)

Medical school admissions has always had a volume problem, but it’s getting sharper. Recent AAMC reporting shows applications rose in 2025, driven largely by first-time applicants. Meanwhile, schools have limited seats, limited reviewers, and limited patience for reading thousands of nearly identical “I learned empathy from volunteering” paragraphs.

Generative AI pours gasoline on that pressure in two ways:

- Applicants can produce more essays, faster. Secondary applications can multiply like gremlins after midnight. AI makes it easier to keep up.

- Schools feel forced to automate triage. When humans can’t read everything carefully, automation starts to look like oxygen.

The scary part isn’t that machines exist; it’s that both sides are incentivized to use them more each year. That’s how you get an arms race: you don’t have to love it, you just have to fear falling behind.

Side A: The applicant with a robot co-writer

Essays were already “coached.” AI just industrializes the coaching.

Let’s be honest: “help” with applications is not new. Students have always had mentors, advisors, and sometimes pricey consultants. Generative AI doesn’t invent assistance; it scales it. Instead of getting feedback from one advisor on one draft, an applicant can generate twenty drafts before lunch, then ask for a “more compelling narrative arc” like they’re workshopping a Netflix pilot.

That raises a fundamental question: what are essays for?

- If essays are meant to test writing ability, AI undermines the point.

- If essays are meant to reveal authentic motivation and reflection, AI risks replacing the applicant’s voice with a polished average.

- If essays are meant to show communication and professionalism, AI can still fit, if used transparently and responsibly.

The problem is that admissions committees often treat essays as all three at once, without saying which one matters most.

When the rules are fuzzy, applicants do what applicants do: optimize for what gets accepted.

AMCAS doesn’t ban AI, because a ban would belong in a fantasy novel

A key detail many people miss: the AMCAS certification language explicitly acknowledges that applicants may use AI tools for brainstorming, proofreading, or editing, while still certifying that the final submission is the applicant’s own work and reflects their experiences. In other words, the system is already trying to draw a line between “assistance” and “authorship.”

That’s sensible in theory but messy in practice, because “brainstorming” can mean anything from “help me outline my story” to “write my story and I’ll change three adjectives.” And once an essay is cleaned up by an AI that mimics human tone, schools can’t reliably tell where the applicant ends and the machine begins.

The equity twist: AI can help some applicants… and still widen the gap

There’s a hopeful version of this story: AI lowers barriers for applicants who don’t have elite advising, who are first-generation, or who speak English as a second language. A tool that helps with clarity and grammar can be a legitimate equalizer.

There’s also a darker version: applicants with more resources can stack AI on top of premium coaching, turning “assistance” into a full-blown content production pipeline. If schools respond by adding more paid assessments, more hoops, and more surveillance, the applicants with fewer resources lose again, just in a different chapter.

Side B: The admissions office with a robot reader

AI triage is already happening

This isn’t a future scenario. Some schools have openly discussed using AI as an initial screener. For example, AAMC reporting describes an AI system acting as a first reader for thousands of applications at Hofstra/Northwell’s Zucker School of Medicine, helping sort applications into categories for further human review and interview consideration. Other institutions have explored similar approaches.

On paper, that sounds like a win: faster processing, more consistency, fewer exhausted humans making snap judgments at 11:47 p.m.

But “consistent” isn’t automatically “fair.”

Machine learning learns from history, including the parts we wish weren’t true

Here’s the core risk: screening models are often trained on past decisions. If past decisions reflect structural inequities, prestige bias, or inconsistent rubrics, the model may reproduce those patterns at scale. Even when developers add guardrails, the model can latch onto proxies: signals that correlate with acceptance but shouldn’t matter.

Research on AI screening in medical school admissions has shown feasibility and promising performance metrics in specific settings, including attempts to reduce inter- and intra-reviewer variability and to replicate faculty screening outcomes. That’s important, but it comes with caveats: feasibility is not the same as justice, and accuracy is not the same as validity.

And then there’s the “quiet proxy” problem: AI systems can overvalue patterns that appear harmless but are socially loaded, like certain activity descriptions, institutional signals, or language styles that track with privilege.

The AAMC itself has warned that any AI use should be balanced with human judgment and subjected to the same scrutiny used for traditional selection tools, emphasizing concerns about privacy, fairness, transparency, and validity.

“We’ll just detect AI-written essays” is not a reliable safety plan

In many academic contexts, AI-detection tools have been criticized as unreliable, with risks of false positives and weak evidentiary value.

If schools treat detection as a courtroom-grade lie detector, they could end up penalizing honest applicants (including those who naturally write in a “too polished” style, or those who receive legitimate editing help).
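To see why false positives matter at admissions scale, a rough base-rate calculation helps. This is a minimal Python sketch, and every number in it (20,000 essays, a 10% share written mostly by AI, a 90% detection rate, a 2% false-positive rate) is a hypothetical assumption chosen for illustration, not a measured property of any real detector or applicant pool. The function name `detector_flag_outcomes` is likewise made up for this example.

```python
def detector_flag_outcomes(n_essays, share_ai_written,
                           true_positive_rate, false_positive_rate):
    """Expected outcomes when a hypothetical AI detector screens a pool of essays.

    All rates are illustrative assumptions; real detector error rates vary
    by tool, text length, and writing style.
    """
    n_ai = n_essays * share_ai_written
    n_human = n_essays - n_ai
    true_flags = n_ai * true_positive_rate        # AI-written essays correctly flagged
    false_flags = n_human * false_positive_rate   # honest essays wrongly flagged
    total_flags = true_flags + false_flags
    # Share of flags that point at an AI-written essay (positive predictive value).
    share_correct = true_flags / total_flags if total_flags else 0.0
    return {
        "honest_essays_flagged": false_flags,
        "total_flagged": total_flags,
        "share_of_flags_correct": share_correct,
    }


result = detector_flag_outcomes(n_essays=20_000, share_ai_written=0.10,
                                true_positive_rate=0.90, false_positive_rate=0.02)
print(f"{result['honest_essays_flagged']:.0f} honest essays flagged")  # 360 honest essays flagged
print(f"{result['share_of_flags_correct']:.0%} of flags are correct")  # 83% of flags are correct
```

Under those assumed rates, the detector would wrongly flag about 360 honest applicants, and one flag in six would point at the wrong person. Lower the share of AI-written essays or raise the false-positive rate, and the share of wrong flags climbs, which is the basic reason a flag alone is weak evidence.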

A detection arms race also misses the point: the goal shouldn’t be to punish applicants for using tools the broader system increasingly normalizes. The goal should be to redesign admissions so authenticity and readiness are evaluated in ways that don’t collapse under automation.

What an “AI-driven admissions disaster” actually looks like

The disaster scenario isn’t one dramatic moment. It’s a slow drift into a process that looks professional but behaves unfairly.

Here are the most likely failure modes:

- Homogenized applications. Essays converge into the same tone: reflective, earnest, blandly competent. Schools lose signal, so they add more requirements, making the process even noisier.

- Algorithmic gatekeeping. AI triage quietly shapes who gets seen by humans, and minor modeling choices become major life outcomes.

- Bias at scale. Even small skews (by school, region, socioeconomic proxy, or language style) compound when applied to tens of thousands of applications.

- Trust collapse. Applicants suspect the process is rigged by tech. Schools suspect applicants are outsourcing their identities. Everyone becomes a little more cynical, and cynicism is a terrible ingredient in medicine.

- Privacy and consent confusion. Sensitive application materials become training data, vendor data, or “analytics” in ways applicants never truly understood.

If this feels dramatic, remember: admissions already operates under intense pressure. AI doesn’t create the pressure; it just multiplies the consequences of bad design.

How to prevent the crash: practical fixes that don’t require a time machine

1) Make the rules explicit and reality-based

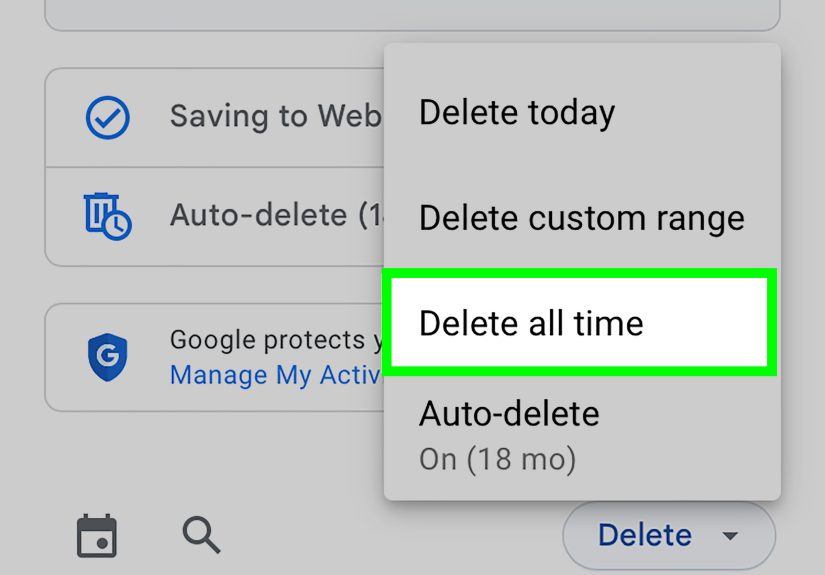

If applicants are permitted to use AI for brainstorming and editing (as AMCAS language suggests), schools should align their expectations with that reality. Not with vague “be authentic” slogans, but with clear boundaries:

- Allowed: grammar cleanup, clarity improvement, outline feedback, idea generation.

- Not allowed: inventing experiences, fabricating achievements, ghostwriting the narrative voice.

- Encouraged: keeping notes, drafts, and reflections that demonstrate ownership of the story.

2) Redesign prompts to reward specificity that AI can’t fake cheaply

Instead of broad prompts (“Why medicine?”), use prompts that require concrete, verifiable detail and reflection: specific moments, tensions, tradeoffs, and lessons that connect to a demonstrated track record. AI can imitate insight, but it struggles to generate grounded nuance without real input.

3) Use more structured, mission-aligned human evaluation

The AAMC has long emphasized mission-aligned selection (often discussed under the umbrella of holistic review), balancing experiences, attributes, competencies, and metrics. Structure matters: clear rubrics, trained readers, and consistent scoring reduce the temptation to outsource judgment to tools.

4) If AI is used, keep humans accountable and the system auditable

AI can support triage, but schools should treat it like a high-stakes clinical tool: validate it, monitor it, and document it.

That means:

- Transparency: tell applicants when AI is used and what it does (triage? summarization? tagging?).

- Human oversight: admissions committees retain responsibility for decisions, not vendors or models.

- Bias testing: routinely evaluate performance across demographic groups and applicant contexts.

- Appeals and review: have pathways to re-review borderline cases without “the model said no” as the final word.
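One simple form of the bias testing listed above is to compare how often each group passes the AI triage step. The Python sketch below assumes a hypothetical audit log of (group, advanced-to-human-review) pairs and borrows the “four-fifths” heuristic from US employment-selection guidance (the Uniform Guidelines on Employee Selection Procedures): a group whose selection rate falls below 80% of the highest group’s rate gets flagged for a closer look. Nothing here is an AAMC or AMCAS standard; the threshold, the function names, and the sample data are all illustrative assumptions.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of applications in each group that the triage step advanced.

    records: iterable of (group_label, advanced) pairs, where `advanced` is
    True if the application was sent on to human review. This log format is
    a hypothetical assumption for the sketch.
    """
    totals = defaultdict(int)
    advanced = defaultdict(int)
    for group, was_advanced in records:
        totals[group] += 1
        if was_advanced:
            advanced[group] += 1
    return {group: advanced[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Each group's selection rate divided by the highest group's rate."""
    best = max(rates.values())
    # If no group advanced anyone, ratios are undefined; report 0.0 for all.
    return {group: (rate / best if best else 0.0) for group, rate in rates.items()}


def groups_needing_review(records, threshold=0.8):
    """Groups whose impact ratio falls below the assumed four-fifths threshold."""
    ratios = impact_ratios(selection_rates(records))
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical audit data: 1,000 applications per group.
records = [("group_a", i < 500) for i in range(1000)] + \
          [("group_b", i < 350) for i in range(1000)]

print(selection_rates(records))        # {'group_a': 0.5, 'group_b': 0.35}
print(groups_needing_review(records))  # ['group_b']
```

A ratio below the threshold is a prompt to investigate, not proof of bias, and a ratio above it is not proof of fairness. Small groups produce noisy rates, and the same check would need to be repeated for whichever applicant contexts (region, school type, first-generation status) an audit cares about.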

5) Shift emphasis toward assessments that are harder to outsource

Schools already use interviews and structured formats like Multiple Mini Interviews (MMIs) and situational judgment approaches to evaluate reasoning, communication, and professionalism. Tools like the AAMC PREview exam exist to assess professional readiness competencies in a standardized way. No method is perfect, but a balanced approach reduces overreliance on essays as the “main signal.”

What applicants should do (ethically) in an AI-shaped world

If you’re applying, the safest strategy is to treat AI like a helpful editor, not a substitute self.

That means:

- Start with your own raw material: bullet points, memories, specific stories, and what you actually learned.

- Use AI for clarity, not invention: ask for better structure, tighter sentences, or simpler wording, then rewrite in your voice.

- Stay consistent: if your essay voice doesn’t match how you speak in interviews, you’re creating risk for yourself.

- Never fabricate: invented experiences can become a professionalism issue that follows you far beyond admissions.

The goal is simple: make your application more readable without making it less real.

Conclusion: AI can help admissions, but it can’t replace judgment

Medical school admissions is trying to solve a real problem: too many applications, too little time, and too much at stake. AI can help organize information, reduce reviewer variability, and speed up logistics. But if it becomes a substitute for mission-driven human judgment, or if applicants feel forced to outsource their voices to compete, we risk building a process that is efficient, polished, and quietly unfair.

The fix isn’t to ban tools everyone will use anyway. The fix is to redesign admissions so authenticity survives contact with automation: clearer rules, better prompts, structured review, validated tools, and transparency that treats applicants like future colleagues, not data points.

Experiences from the front lines (the extra everyone asked for)

To understand why this feels like a slow-motion disaster, it helps to look at the lived “application season” experience: what applicants, advisors, and admissions staff report seeing in real time. The stories below are composites, but the patterns are very real.

Experience #1: The secondary-essay treadmill

An applicant gets their first secondary email and feels a tiny adrenaline spike. Then the second arrives. Then the third. By the end of the week, it’s a parade: “Describe a challenge.” “Why our school?” “Explain a time you worked on a team.” In previous years, the applicant might have needed days per essay, leading to tough choices: fewer schools, fewer chances.

Now, generative AI makes it tempting to respond at machine speed. The applicant feeds in the prompt, gets a clean draft, tweaks it, submits it, and repeats, like an assembly line staffed by anxiety.

The emotional tradeoff is subtle: the faster the process gets, the less time the applicant spends actually reflecting. Essays become transactions instead of self-examination. The applicant doesn’t feel like they’re cheating; they feel like they’re surviving. And survival mode is not where your best, most honest writing tends to live.

Experience #2: The advisor trying to teach “voice” in a world of perfect grammar

Advisors increasingly describe a new kind of draft: technically excellent, emotionally vague. It reads like someone who has learned the shape of sincerity without experiencing it. The paragraphs flow. The transitions sparkle. The story… could belong to anybody.

The advisor’s job shifts from “fix the writing” to “recover the person.”

The best advising conversations now sound less like English class and more like therapy-lite (the healthy kind): “What did that moment actually feel like?” “What changed because of it?” “What would you do differently now?”

Because in an AI era, specificity becomes the rarest currency.

Experience #3: The admissions reader drowning in polished sameness

On the other side, an admissions committee member opens application after application that’s “good.” Not great. Not terrible. Just… flawlessly competent. The reader starts craving imperfection (not errors, but humanity): a distinctive perspective, a hard-earned lesson, a moment of uncertainty that turned into growth. Instead, they get beautifully written paragraphs that could be swapped between applicants without anyone noticing.

This is where AI screening becomes seductive. If essays aren’t differentiating, why not let a model extract themes and triage for speed? But that’s also where the risk spikes: the system may start relying more on proxies (school name, metrics, activity labels), exactly the kind of signals holistic review was meant to balance. The reader doesn’t want to be unfair; they just want the pile to shrink.

And that’s how “efficiency” quietly becomes the guiding value.

Experience #4: The interview as the truth serum (that still isn’t perfect)

Applicants sometimes arrive at interviews with essays that sound like a different person. It may not even be malicious. It can be as simple as this: the essay got polished into a voice that feels “professional,” but the applicant speaks more plainly in real life. Interviewers notice the mismatch and wonder what it means. Was the essay overly coached? Was it AI-assisted? Or is the applicant just nervous?

The ambiguity is exhausting for everyone.

The takeaway from these experiences isn’t “ban AI.” It’s that admissions needs a redesign that keeps humans recognizable on both sides: applicants as real people with real stories, and schools as real institutions making accountable decisions, without outsourcing trust to a tool.